Extrapolation of the expansion of the Universe backwards in time using general relativity yields an infinite density and temperature at a finite time in the past. This singularity signals the breakdown of general relativity. How closely we can extrapolate towards the singularity is debated—certainly no closer than the end of the Planck epoch. This singularity is sometimes called "the Big Bang", but the term can also refer to the early hot, dense phase itself, which can be considered the "birth" of our Universe. Based on measurements of the expansion using Type Ia supernovae, measurements of temperature fluctuations in the cosmic microwave background, and measurements of the correlation function of galaxies, the Universe has a calculated age of 13.75 ± 0.11 billion years. The agreement of these three independent measurements strongly supports the ΛCDM model that describes in detail the contents of the Universe.

The earliest phases of the Big Bang are subject to much speculation. In the most common models, the Universe was filled homogeneously and isotropically with an incredibly high energy density, huge temperatures and pressures, and was very rapidly expanding and cooling. Approximately 10⁻³⁷ seconds into the expansion, a phase transition caused a cosmic inflation, during which the Universe grew exponentially. After inflation stopped, the Universe consisted of a quark–gluon plasma, as well as all other elementary particles. Temperatures were so high that the random motions of particles were at relativistic speeds, and particle–antiparticle pairs of all kinds were being continuously created and destroyed in collisions. At some point an unknown reaction called baryogenesis violated the conservation of baryon number, leading to a very small excess of quarks and leptons over antiquarks and antileptons, of the order of one part in 30 million. This resulted in the predominance of matter over antimatter in the present Universe.

The Universe continued to grow in size and fall in temperature, hence the typical energy of each particle was decreasing. Symmetry breaking phase transitions put the fundamental forces of physics and the parameters of elementary particles into their present form.[39] After about 10⁻¹¹ seconds, the picture becomes less speculative, since particle energies drop to values that can be attained in particle physics experiments. At about 10⁻⁶ seconds, quarks and gluons combined to form baryons such as protons and neutrons. The small excess of quarks over antiquarks led to a small excess of baryons over antibaryons. The temperature was now no longer high enough to create new proton–antiproton pairs (similarly for neutron–antineutron pairs), so a mass annihilation immediately followed, leaving just one in 10¹⁰ of the original protons and neutrons, and none of their antiparticles. A similar process happened at about 1 second for electrons and positrons. After these annihilations, the remaining protons, neutrons and electrons were no longer moving relativistically and the energy density of the Universe was dominated by photons (with a minor contribution from neutrinos).

A few minutes into the expansion, when the temperature was about a billion (10⁹) kelvin and the density was about that of air, neutrons combined with protons to form the Universe's deuterium and helium nuclei in a process called Big Bang nucleosynthesis.[40] Most protons remained uncombined as hydrogen nuclei. As the Universe cooled, the rest mass energy density of matter came to gravitationally dominate that of the photon radiation. After about 379,000 years the electrons and nuclei combined into atoms (mostly hydrogen); hence the radiation decoupled from matter and continued through space largely unimpeded. This relic radiation is known as the cosmic microwave background radiation.

The Hubble Ultra Deep Field showcases galaxies from an ancient era when the Universe was younger, denser, and warmer according to the Big Bang theory.

Over a long period of time, the slightly denser regions of the nearly uniformly distributed matter gravitationally attracted nearby matter and thus grew even denser, forming gas clouds, stars, galaxies, and the other astronomical structures observable today. The details of this process depend on the amount and type of matter in the Universe. The four possible types of matter are known as cold dark matter, warm dark matter, hot dark matter and baryonic matter. The best measurements available (from WMAP) show that the data are well fit by a Lambda-CDM model in which dark matter is assumed to be cold (warm dark matter is ruled out by early reionization) and is estimated to make up about 23% of the matter/energy of the Universe, while baryonic matter makes up about 4.6%. In an "extended model" that includes hot dark matter in the form of neutrinos, the "physical baryon density" Ω_b h² is estimated at about 0.023 (this differs from the "baryon density" Ω_b expressed as a fraction of the total matter/energy density, which as noted above is about 0.046), the corresponding cold dark matter density Ω_c h² is about 0.11, and the corresponding neutrino density Ω_ν h² is estimated to be less than 0.0062.
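The relation between the "physical" densities (Ω h²) and the fractional densities (Ω) quoted above can be checked with a short calculation. The sketch below assumes the usual definition h = H0 / (100 km/s/Mpc) and takes H0 = 70.4 km/s/Mpc, the WMAP value quoted in the section on Hubble's law; the function name is hypothetical and chosen only for illustration.

```python
# Convert "physical" densities (Omega * h^2) into fractional densities (Omega).
# Assumption: h = H0 / (100 km/s/Mpc), with H0 = 70.4 km/s/Mpc taken from the
# WMAP value quoted elsewhere in this article.

H0_KM_S_MPC = 70.4
h = H0_KM_S_MPC / 100.0


def fractional_density(physical_density: float, h: float) -> float:
    """Return Omega given Omega * h^2 and the dimensionless Hubble parameter h."""
    return physical_density / h**2


omega_b = fractional_density(0.023, h)  # baryonic matter
omega_c = fractional_density(0.11, h)   # cold dark matter

print(f"Omega_b is roughly {omega_b:.3f}")
print(f"Omega_c is roughly {omega_c:.3f}")
```

Under these assumptions the results land close to the 4.6% and 23% figures given in the text, which is the consistency the parenthetical remark describes.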

Independent lines of evidence from Type Ia supernovae and the CMB imply that the Universe today is dominated by a mysterious form of energy known as dark energy, which apparently permeates all of space. The observations suggest 73% of the total energy density of today's Universe is in this form. When the Universe was very young, it was likely infused with dark energy, but with less space and everything closer together, gravity had the upper hand, and it was slowly braking the expansion. Eventually, after several billion years of expansion, the growing abundance of dark energy caused the expansion of the Universe to slowly begin to accelerate. Dark energy in its simplest formulation takes the form of the cosmological constant term in Einstein's field equations of general relativity, but its composition and mechanism are unknown and, more generally, the details of its equation of state and relationship with the Standard Model of particle physics continue to be investigated both observationally and theoretically.
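As a rough illustration of how the dark energy fraction and the expansion rate fix the age of the Universe, the sketch below evaluates the standard closed-form age of a spatially flat universe containing only matter and a cosmological constant. The flatness, the neglect of radiation, and the function names are simplifying assumptions made here, not statements from the measurements above; H0 = 70.4 km/s/Mpc is the WMAP value quoted in the section on Hubble's law.

```python
import math

KM_PER_MPC = 3.0857e19          # kilometres in one megaparsec
SECONDS_PER_GYR = 3.15576e16    # seconds in 10^9 Julian years


def hubble_time_gyr(h0_km_s_mpc: float) -> float:
    """Return 1/H0 in billions of years."""
    return KM_PER_MPC / h0_km_s_mpc / SECONDS_PER_GYR


def flat_lcdm_age_gyr(h0_km_s_mpc: float, omega_lambda: float) -> float:
    """Age of a flat matter + cosmological-constant universe (radiation ignored)."""
    omega_m = 1.0 - omega_lambda  # flatness assumption: the two fractions sum to 1
    factor = (2.0 / (3.0 * math.sqrt(omega_lambda))) * math.asinh(
        math.sqrt(omega_lambda / omega_m)
    )
    return factor * hubble_time_gyr(h0_km_s_mpc)


age = flat_lcdm_age_gyr(70.4, 0.73)
print(f"Hubble time: {hubble_time_gyr(70.4):.2f} Gyr")
print(f"Flat LCDM age estimate: {age:.2f} Gyr")
```

With these inputs the estimate falls within a few tenths of a billion years of the 13.75 billion year age quoted at the start of the article; the published figure comes from a full parameter fit, not from this simplified formula.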

All of this cosmic evolution after the inflationary epoch can be rigorously described and modeled by the ΛCDM model of cosmology, which uses the independent frameworks of quantum mechanics and Einstein's General Relativity. As noted above, there is no well-supported model describing the action prior to 10⁻¹⁵ seconds or so. Apparently a new unified theory of quantum gravitation is needed to break this barrier. Understanding this earliest of eras in the history of the Universe is currently one of the greatest unsolved problems in physics.

Underlying assumptions

The Big Bang theory depends on two major assumptions: the universality of physical laws, and the cosmological principle. The cosmological principle states that on large scales the Universe is homogeneous and isotropic.

These ideas were initially taken as postulates, but today there are efforts to test each of them. For example, the first assumption has been tested by observations showing that the largest possible deviation of the fine structure constant over much of the age of the Universe is of order 10⁻⁵. Also, general relativity has passed stringent tests on the scale of the solar system and binary stars, while extrapolation to cosmological scales has been validated by the empirical successes of various aspects of the Big Bang theory.

If the large-scale Universe appears isotropic as viewed from Earth, the cosmological principle can be derived from the simpler Copernican principle, which states that there is no preferred (or special) observer or vantage point. To this end, the cosmological principle has been confirmed to a level of 10⁻⁵ via observations of the CMB. The Universe has been measured to be homogeneous on the largest scales at the 10% level.

FLRW metric

General relativity describes spacetime by a metric, which determines the distances that separate nearby points. The points, which can be galaxies, stars, or other objects, themselves are specified using a coordinate chart or "grid" that is laid down over all spacetime. The cosmological principle implies that the metric should be homogeneous and isotropic on large scales, which uniquely singles out the Friedmann–Lemaître–Robertson–Walker metric (FLRW metric). This metric contains a scale factor, which describes how the size of the Universe changes with time. This enables a convenient choice of a coordinate system to be made, called comoving coordinates. In this coordinate system, the grid expands along with the Universe, and objects that are moving only due to the expansion of the Universe remain at fixed points on the grid. While their coordinate distance (comoving distance) remains constant, the physical distance between two such comoving points expands proportionally with the scale factor of the Universe.
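The relationship between comoving and physical distance described above can be sketched in a few lines. The example assumes the common convention that the scale factor equals 1 today, which the text does not state, and the function name is hypothetical.

```python
def physical_distance_mpc(comoving_distance_mpc: float, scale_factor: float) -> float:
    """Physical separation of two comoving points for a given scale factor.

    Assumption: the scale factor is normalised to 1 at the present day, so
    comoving and physical distances coincide today.
    """
    return scale_factor * comoving_distance_mpc


comoving = 100.0  # Mpc; stays fixed for objects that move only with the expansion

for a in (0.25, 0.5, 1.0):
    d = physical_distance_mpc(comoving, a)
    print(f"scale factor {a}: physical distance {d} Mpc")
```

The comoving coordinate never changes in this loop; only the scale factor does, which is why the grid is said to expand along with the Universe.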

The Big Bang is not an explosion of matter moving outward to fill an empty universe. Instead, space itself expands with time everywhere and increases the physical distance between two comoving points. Because the FLRW metric assumes a uniform distribution of mass and energy, it applies to our Universe only on large scales—local concentrations of matter such as our galaxy are gravitationally bound and as such do not experience the large-scale expansion of space.

Horizons

An important feature of the Big Bang spacetime is the presence of horizons. Since the Universe has a finite age, and light travels at a finite speed, there may be events in the past whose light has not had time to reach us. This places a limit or a past horizon on the most distant objects that can be observed. Conversely, because space is expanding, and more distant objects are receding ever more quickly, light emitted by us today may never "catch up" to very distant objects. This defines a future horizon, which limits the events in the future that we will be able to influence. The presence of either type of horizon depends on the details of the FLRW model that describes our Universe. Our understanding of the Universe back to very early times suggests that there is a past horizon, though in practice our view is also limited by the opacity of the Universe at early times. So our view cannot extend further backward in time, though the horizon recedes in space. If the expansion of the Universe continues to accelerate, there is a future horizon as well.

Observational evidence

The earliest and most direct kinds of observational evidence are the Hubble-type expansion seen in the redshifts of galaxies, the detailed measurements of the cosmic microwave background, the abundance of light elements (see Big Bang nucleosynthesis), and today also the large scale distribution and apparent evolution of galaxies which are predicted to occur due to gravitational growth of structure in the standard theory. These are sometimes called "the four pillars of the Big Bang theory".

Hubble's law and the expansion of space

Observations of distant galaxies and quasars show that these objects are redshifted: the light emitted from them has been shifted to longer wavelengths. This can be seen by taking a frequency spectrum of an object and matching the spectroscopic pattern of emission lines or absorption lines corresponding to atoms of the chemical elements interacting with the light. These redshifts are uniformly isotropic, distributed evenly among the observed objects in all directions. If the redshift is interpreted as a Doppler shift, the recessional velocity of the object can be calculated. For some galaxies, it is possible to estimate distances via the cosmic distance ladder. When the recessional velocities are plotted against these distances, a linear relationship known as Hubble's law is observed:

- v = H0D,

where

- v is the recessional velocity of the galaxy or other distant object,

- D is the comoving distance to the object, and

- H0 is Hubble's constant, measured to be 70.4 (+1.3, −1.4) km/s/Mpc by the WMAP probe.
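Hubble's law as defined above can be applied in either direction: from a distance to a recessional velocity, or from a velocity to a distance. The following is a minimal sketch using the WMAP central value for H0; the function names are hypothetical, and the relation is only meaningful on scales where the large-scale expansion dominates over local motions.

```python
H0_KM_S_MPC = 70.4  # Hubble's constant, WMAP central value quoted above


def recession_velocity_km_s(distance_mpc: float, h0: float = H0_KM_S_MPC) -> float:
    """Hubble's law: v = H0 * D, with D in Mpc and v in km/s."""
    return h0 * distance_mpc


def distance_mpc(velocity_km_s: float, h0: float = H0_KM_S_MPC) -> float:
    """Invert Hubble's law to estimate a distance from a recessional velocity."""
    return velocity_km_s / h0


v = recession_velocity_km_s(100.0)
print(f"A galaxy at 100 Mpc recedes at about {v:.0f} km/s")
print(f"A recession velocity of {v:.0f} km/s corresponds to {distance_mpc(v):.0f} Mpc")
```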

Hubble's law has two possible explanations. Either we are at the center of an explosion of galaxies—which is untenable given the Copernican Principle—or the Universe is uniformly expanding everywhere. This universal expansion was predicted from general relativity by Alexander Friedmann in 1922 and Georges Lemaître in 1927, well before Hubble made his 1929 analysis and observations, and it remains the cornerstone of the Big Bang theory as developed by Friedmann, Lemaître, Robertson and Walker.

The theory requires the relation v = HD to hold at all times, where D is the comoving distance, v is the recessional velocity, and v, H, and D vary as the Universe expands (hence we write H0 to denote the present-day Hubble "constant"). For distances much smaller than the size of the observable Universe, the Hubble redshift can be thought of as the Doppler shift corresponding to the recession velocity v. However, the redshift is not a true Doppler shift, but rather the result of the expansion of the Universe between the time the light was emitted and the time that it was detected.

That space is undergoing metric expansion is shown by direct observational evidence of the Cosmological Principle and the Copernican Principle, which together with Hubble's law have no other explanation. Astronomical redshifts are extremely isotropic and homogeneous, supporting the Cosmological Principle that the Universe looks the same in all directions, along with much other evidence. If the redshifts were the result of an explosion from a center distant from us, they would not be so similar in different directions.

Measurements of the effects of the cosmic microwave background radiation on the dynamics of distant astrophysical systems in 2000 proved the Copernican Principle, that the Earth is not in a central position, on a cosmological scale. Radiation from the Big Bang was demonstrably warmer at earlier times throughout the Universe. Uniform cooling of the cosmic microwave background over billions of years is explainable only if the Universe is experiencing a metric expansion, and excludes the possibility that we are near the unique center of an explosion.

Cosmic microwave background radiation

WMAP image of the cosmic microwave background radiation

During the first few days of the Universe, the Universe was in full thermal equilibrium, with photons being continually emitted and absorbed, giving the radiation a blackbody spectrum. As the Universe expanded, it cooled to a temperature at which photons could no longer be created or destroyed. The temperature was still high enough for electrons and nuclei to remain unbound, however, and photons were constantly "reflected" from these free electrons through a process called Thomson scattering. Because of this repeated scattering, the early Universe was opaque to light.

When the temperature fell to a few thousand Kelvin, electrons and nuclei began to combine to form atoms, a process known as recombination. Since photons scatter infrequently from neutral atoms, radiation decoupled from matter when nearly all the electrons had recombined, at the epoch of last scattering, 379,000 years after the Big Bang. These photons make up the CMB that is observed today, and the observed pattern of fluctuations in the CMB is a direct picture of the Universe at this early epoch. The energy of photons was subsequently redshifted by the expansion of the Universe, which preserved the blackbody spectrum but caused its temperature to fall, meaning that the photons now fall into the microwave region of the electromagnetic spectrum. The radiation is thought to be observable at every point in the Universe, and comes from all directions with (almost) the same intensity.
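The cooling of the blackbody spectrum by expansion can be made concrete with a short calculation. The sketch below assumes the standard scaling T(z) = T0 (1 + z), a last-scattering redshift of about 1100 (a commonly quoted figure that is not stated in this article), and the present-day temperature of 2.726 K reported by COBE; Wien's displacement law then gives the wavelength at which each spectrum peaks. Function names are hypothetical.

```python
WIEN_B_M_K = 2.897771955e-3   # Wien displacement constant, metre-kelvin
T0_K = 2.726                  # present-day CMB temperature (COBE value)
Z_LAST_SCATTERING = 1100      # assumption: approximate redshift of last scattering


def cmb_temperature_k(redshift: float, t0_k: float = T0_K) -> float:
    """CMB temperature at a given redshift, assuming T scales as (1 + z)."""
    return t0_k * (1.0 + redshift)


def wien_peak_wavelength_m(temperature_k: float) -> float:
    """Wavelength at which a blackbody of this temperature peaks."""
    return WIEN_B_M_K / temperature_k


t_then = cmb_temperature_k(Z_LAST_SCATTERING)
peak_then = wien_peak_wavelength_m(t_then)
peak_now = wien_peak_wavelength_m(T0_K)

print(f"Temperature at last scattering: about {t_then:.0f} K")
print(f"Peak wavelength then: {peak_then * 1e9:.0f} nm")
print(f"Peak wavelength now: {peak_now * 1e3:.2f} mm")
```

Under these assumptions the temperature at last scattering comes out at a few thousand kelvin, matching the text, and the peak moves from roughly a micrometre to roughly a millimetre, which is why the radiation is observed as microwaves today.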

In 1964, Arno Penzias and Robert Wilson accidentally discovered the cosmic background radiation while conducting diagnostic observations using a new microwave receiver owned by Bell Laboratories. Their discovery provided substantial confirmation of the general CMB predictions—the radiation was found to be isotropic and consistent with a blackbody spectrum of about 3 K—and it pitched the balance of opinion in favor of the Big Bang hypothesis. Penzias and Wilson were awarded a Nobel Prize for their discovery.

The cosmic microwave background spectrum measured by the FIRAS instrument on the COBE satellite is the most-precisely measured black body spectrum in nature. The data points and error bars on this graph are obscured by the theoretical curve.

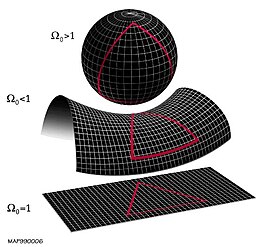

In 1989, NASA launched the Cosmic Background Explorer satellite (COBE), and the initial findings, released in 1990, were consistent with the Big Bang's predictions regarding the CMB. COBE found a residual temperature of 2.726 K and in 1992 detected for the first time the fluctuations (anisotropies) in the CMB, at a level of about one part in 10⁵. John C. Mather and George Smoot were awarded the Nobel Prize for their leadership in this work. During the following decade, CMB anisotropies were further investigated by a large number of ground-based and balloon experiments. In 2000–2001, several experiments, most notably BOOMERanG, found the Universe to be almost spatially flat by measuring the typical angular size (the size on the sky) of the anisotropies. (See shape of the Universe.)

In early 2003, the first results of the Wilkinson Microwave Anisotropy Probe (WMAP) were released, yielding what were at the time the most accurate values for some of the cosmological parameters. This spacecraft also disproved several specific cosmic inflation models, but the results were consistent with inflation theory in general. The WMAP data also confirmed that a sea of cosmic neutrinos permeates the Universe and gave clear evidence that the first stars took more than half a billion years to create a cosmic fog. A new space probe named Planck, with goals similar to WMAP, was launched in May 2009. It is anticipated to soon provide even more accurate measurements of the CMB anisotropies. Many other ground- and balloon-based experiments are also currently running; see Cosmic microwave background experiments.

The background radiation is exceptionally smooth, which presented a problem in that conventional expansion would mean that photons coming from opposite directions in the sky were coming from regions that had never been in contact with each other. The leading explanation for this far reaching equilibrium is that the Universe had a brief period of rapid exponential expansion, called inflation. This would have the effect of driving apart regions that had been in equilibrium, so that all the observable Universe was from the same equilibrated region.

Abundance of primordial elements

Using the Big Bang model it is possible to calculate the concentration of helium-4, helium-3, deuterium and lithium-7 in the Universe as ratios to the amount of ordinary hydrogen, H. All the abundances depend on a single parameter, the ratio of photons to baryons, which itself can be calculated independently from the detailed structure of CMB fluctuations. The ratios predicted (by mass, not by number) are about 0.25 for ⁴He/H, about 10⁻³ for ²H/H, about 10⁻⁴ for ³He/H and about 10⁻⁹ for ⁷Li/H.
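The helium figure of about 0.25 can be motivated with a simple counting argument. The sketch below assumes that essentially every neutron available at nucleosynthesis ends up bound in helium-4, and that the neutron-to-proton ratio at that time was about 1/7; both are textbook simplifications that this article does not state, and the function name is hypothetical.

```python
def helium_mass_fraction(neutron_to_proton_ratio: float) -> float:
    """Helium-4 mass fraction if every neutron ends up in a helium-4 nucleus.

    Each helium-4 nucleus holds 2 neutrons and 2 protons, so the mass locked
    in helium is twice the neutron mass, out of a total of neutrons + protons.
    Neutron and proton masses are treated as equal (an approximation).
    """
    r = neutron_to_proton_ratio
    return 2.0 * r / (1.0 + r)


# Assumption: n/p is about 1/7 when nucleosynthesis begins.
y_helium = helium_mass_fraction(1.0 / 7.0)
print(f"Estimated helium-4 mass fraction: {y_helium:.2f}")
```

Because the result depends only on the neutron-to-proton ratio, which is fixed by well-understood physics, this estimate is hard to move far from a quarter.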

The measured abundances all agree at least roughly with those predicted from a single value of the baryon-to-photon ratio. The agreement is excellent for deuterium, close but formally discrepant for ⁴He, and a factor of two off for ⁷Li; in the latter two cases there are substantial systematic uncertainties. Nonetheless, the general consistency with abundances predicted by BBN is strong evidence for the Big Bang, as the theory is the only known explanation for the relative abundances of light elements, and it is virtually impossible to "tune" the Big Bang to produce much more or less than 20–30% helium. Indeed there is no obvious reason outside of the Big Bang that, for example, the young Universe (i.e., before star formation, as determined by studying matter supposedly free of stellar nucleosynthesis products) should have more helium than deuterium or more deuterium than ³He, and in constant ratios, too.

Galactic evolution and distribution

This panoramic view of the entire near-infrared sky reveals the distribution of galaxies beyond the Milky Way. The galaxies are color coded by redshift.

Detailed observations of the morphology and distribution of galaxies and quasars provide strong evidence for the Big Bang. A combination of observations and theory suggests that the first quasars and galaxies formed about a billion years after the Big Bang, and since then larger structures have been forming, such as galaxy clusters and superclusters. Populations of stars have been aging and evolving, so that distant galaxies (which are observed as they were in the early Universe) appear very different from nearby galaxies (observed in a more recent state). Moreover, galaxies that formed relatively recently appear markedly different from galaxies formed at similar distances but shortly after the Big Bang. These observations are strong arguments against the steady-state model. Observations of star formation, galaxy and quasar distributions and larger structures agree well with Big Bang simulations of the formation of structure in the Universe and are helping to complete details of the theory.

Other lines of evidence

After some controversy, the age of the Universe as estimated from the Hubble expansion and the CMB is now in good agreement with (i.e., slightly larger than) the ages of the oldest stars, both as measured by applying the theory of stellar evolution to globular clusters and through radiometric dating of individual Population II stars.

The prediction that the CMB temperature was higher in the past has been experimentally supported by observations of temperature-sensitive emission lines in gas clouds at high redshift. This prediction also implies that the amplitude of the Sunyaev–Zel'dovich effect in clusters of galaxies does not depend directly on redshift; this seems to be roughly true, but unfortunately the amplitude does depend on cluster properties which do change substantially over cosmic time, so a precise test is impossible.

A rotational mouse is a type of computer mouse which attempts to expand traditional mouse functionality. The objective of rotational mice is to facilitate three degrees of freedom (3DOF) for human-computer interaction by adding a third dimensional input, yaw (or Rz), to the existing x and y dimensional inputs. There have been several attempts to develop rotating mice, using a variety of mechanisms to detect rotation.

Mouse rage is a particular type of computer rage. It is a behavioural response provoked by failure or unexpected results observed when using a computer. In particular, this is expressed by focusing the negative emotion incurred by the event on a computer mouse.

Mice often function as an interface for PC-based computer games and sometimes for video game consoles.
